What does the future of ML infrastructure look like?

Mar 19, 2021 6:17 am

Hi !

The reading group I'm starting in collaboration with the MLOps Community will be kicking off tomorrow, March 19 at 5pm UTC! We're discussing the classic Google article "Rules of Machine Learning". If you're interested in participating, join the # reading-group channel in the MLOps Community Slack and subscribe to the update email list here.

The Future of ML and AI Infrastructure and Ethics

Dan Jeffries is the chief technical evangelist at Pachyderm, a leading data science platform. He's a prominent writer and speaker on all things related to the future. He's been in software for over two decades, many of those at Redhat, and is the founder of the AI Infrastructure Alliance and Practical AI Ethics.

In this episode, Dan discusses why he's so excited about the future of machine learning, where it is on the technology adoption curve, the rise of a "canonical stack" of AI infrastructure, and practically approaching the hard problems in AI ethics.

ResNets are back! New training and scaling methods achieve SotA results

"Novel computer vision architectures monopolize the spotlight, but the impact of the model architecture is often conflated with simultaneous changes to training methodology and scaling strategies. Our work revisits the canonical ResNet (He et al., 2015) and studies these three aspects in an effort to disentangle them. Perhaps surprisingly, we find that training and scaling strategies may matter more than architectural changes, and further, that the resulting ResNets match recent state-of-the-art models."

This new paper out of Google Brain and UC Berkeley gathered quite a lot of interest from the community--I've already seen two blog posts providing a summary and commentary on it:

- https://gdude.de/blog/2021-03-15/Revisiting-Resnets

- https://andlukyane.com/blog/paper-review-resnetsr

Now we just have to wait for the inevitable Yannic Kilcher video.

Process large datasets without running out of memory

Itamar Turner-Trauring runs the Python Speed blog, which was previously very helpful to me when I was learning Docker. He now also has an article series on processing large datasets in memory-constrained environments.

It covers topics like efficient code structure, estimating memory requirements for your program, numpy- and pandas-specific strategies, as well as memory profiling. It's been extremely helpful so far and I haven't gotten through the entire series yet!

H/T to Ben from Replicate for linking it in their Discord

For more tips on analysis of large datasets, this older Google Data Science article has stood the test of time.

2021 AI Index Report

Stanford's Institute for Human-Centered AI recently released their 2021 edition of the always-fascinating AI Index Report.

"The AI Index Report tracks, collates, distills, and visualizes data related to artificial intelligence. Its mission is to provide unbiased, rigorously vetted, and globally sourced data for policymakers, researchers, executives, journalists, and the general public to develop intuitions about the complex field of AI."

Click here to read the report.

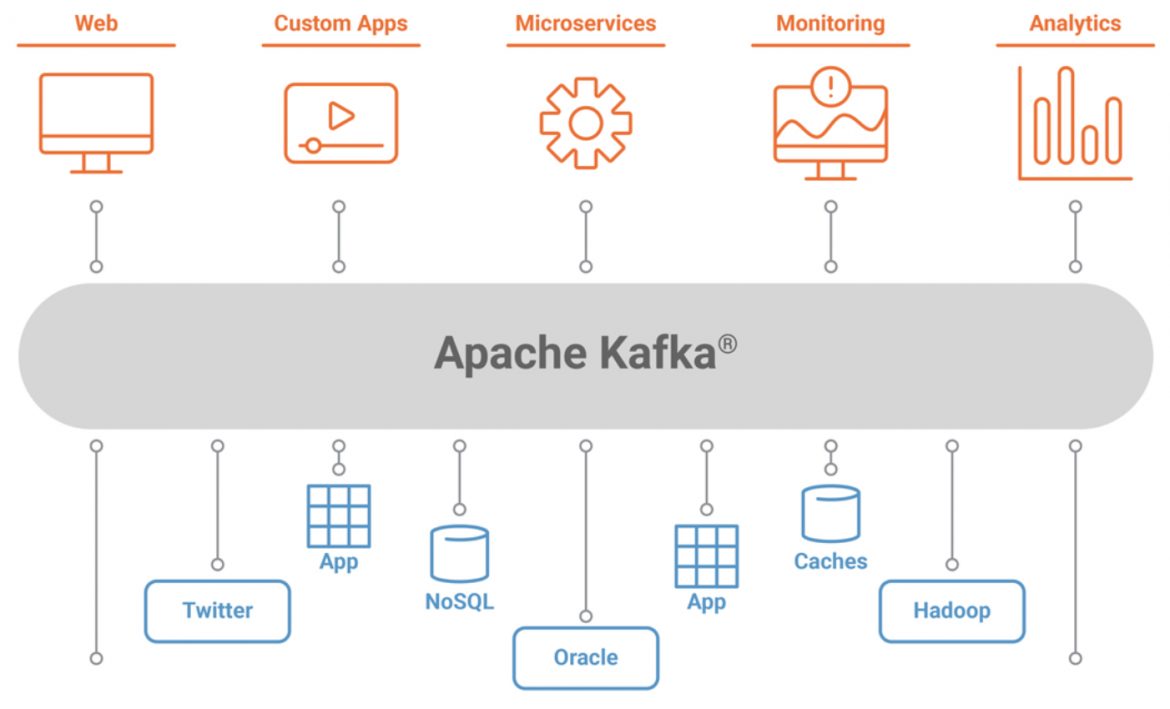

Kafka as a Database?

I recently had to get up to speed with Kafka in order to debug an issue at work. One of the most interesting things I learned in that process was just how many different ways that companies and teams use Kafka. I had quipped in our team's Slack that our usage of it as a datastore seemed like an anti-pattern. I was swiftly corrected by a principal engineer that it was, in fact, a perfectly fine usage of Kafka. That led me to learning all about the pros and cons of that pattern.

Below are three articles presenting the various views held on the issue that I found particularly insightful:

So after reading these, doing I still think it's an anti-pattern? As with many other engineering debates, it depends...

Thanks for reading and have a great rest of your week!

Charlie