Strategic Thinking Exercise Series - week 4

Jul 14, 2025 2:01 pm

This is Part 4 of our 9-part tabletop exercise series. We recommend reading from Part 1 in order. Each week we spotlight three exercises from our new PRISM Strategic Tabletop Exercise Guide Deck: 27 decision-making tools that teams can run in real time, in real rooms, on real challenges.


The Evaluation Station

You've built systematic option diversity: your team can now create 20+ strategic alternatives through structured creativity and stress-test them against multiple future scenarios. But a paradox traps even creatively disciplined teams: more options can produce worse decisions when evaluation methods aren't calibrated for creative volume.

The research foundation: Harvard Business School's analysis of 1,847 strategic decisions found that teams with 15+ alternatives but informal evaluation processes took 280% longer to reach conclusions and achieved 34% worse outcomes than teams using structured evaluation frameworks. The culprit wasn't too many options—it was evaluation methods designed for 2-3 simple choices, overwhelmed by creative complexity (Morrison & Chen, 2024).

The cognitive bottleneck: MIT's Decision Sciences Lab discovered that human evaluation capacity peaks at 7±2 alternatives when using intuitive comparison methods. Beyond this threshold, teams either fall into analysis paralysis (endless circular debate) or regress to familiar biases (choosing options that feel comfortable rather than optimal). The solution isn't fewer options—it's evaluation systems designed for option abundance.

Your Week 3 creative explosion generated 23 strategic alternatives for market expansion, clustered into six distinct approaches, stress-tested against four future scenarios. Congratulations—you've avoided premature convergence. But now what?

This week introduces three systematic evaluation exercises in deliberate sequence—Multi-Choice Decision Matrix → Probabilistic Dice Check → Mini-Delphi Method—that transform creative option abundance into implementable strategic choices while maintaining breakthrough potential.

Why this specific progression works: Structured criteria first (explicit trade-off analysis), uncertainty integration second (acknowledge what can't be known), expert consensus third (harness collective intelligence while preventing groupthink). Each exercise handles different aspects of evaluation complexity that overwhelm traditional decision-making.

The meta-principle: Evaluation precision, not evaluation perfection. Perfect information doesn't exist in strategic contexts. Perfect analysis creates decision delays that cost more than imperfect choices made systematically. This sequence provides sufficient evaluation rigour for high-stakes decisions while maintaining implementation momentum.

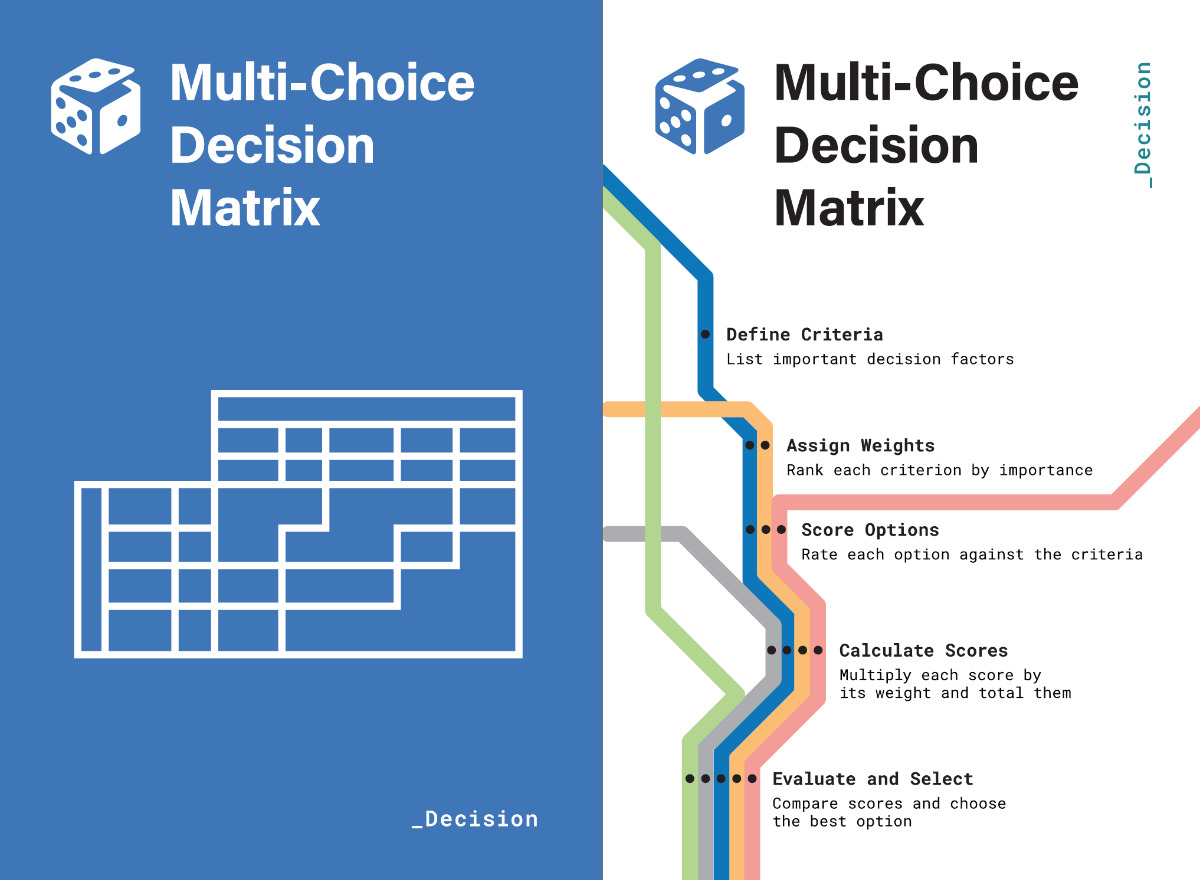

🎲 Multi-Choice Decision Matrix – Trade-off Scorecard

What: Weighted scoring methodology that transforms subjective option comparison into explicit criteria-based evaluation, revealing hidden trade-offs and preference conflicts before they derail implementation.

Why: Informal evaluation processes favour options that feel familiar or politically safe rather than strategically optimal. Systematic criteria weighting and scoring reduce decision paralysis and expose the logic behind choices for later validation and adjustment.

When to deploy: Following Week 3 creative generation, investment decisions, vendor selection, strategic alternative comparison, any situation with multiple viable options requiring systematic trade-off analysis.

The Six-Step Framework

Step 1: Define Criteria. List all important decision factors that differentiate alternatives. Include quantitative measures (ROI, timeline, cost) and qualitative aspects (strategic fit, risk level, implementation difficulty). Aim for 5-8 criteria: fewer risks missing essential factors, while more adds evaluation complexity.

Step 2: Rank Criteria by Importance. Use a 100-point allocation method in which the team distributes weight points across criteria according to strategic importance. This forces explicit trade-off discussions about what matters most in the current organisational context.

Step 3: Score Options Against Criteria. Evaluate each alternative on a consistent 1-10 scale for each criterion, where a higher score is always more favourable (so a 9 on implementation risk means low risk). Use specific anchors: 1-3 (poor fit), 4-6 (adequate fit), 7-8 (good fit), 9-10 (excellent fit). Require evidence for scores above 8.

Step 4: Calculate Weighted Scores. Multiply each option's criterion score by the criterion weight, then sum across all criteria. The resulting weighted totals allow systematic comparison while keeping the criteria transparent.

Step 5: Evaluate Results and Patterns. Analyse score distributions and patterns. Close scores suggest multiple viable alternatives; significant gaps suggest clear preferences. Unexpected winners challenge initial assumptions and require investigation.

Step 6: Select with Confidence. Choose the highest-scoring option while acknowledging the evaluation's limitations. Document the decision logic for future validation and adjustment as new information emerges.

Professional Facilitation Methodology

Criteria development discipline: Generate criteria through systematic questioning: "What would make us choose Alternative A over Alternative B?" Continue until all meaningful differentiators are captured. Test completeness by asking, "Could two options score identically and still be different choices?"

Weight calibration exercise: Before point allocation, have team members individually rank criteria from most to least important. Discuss ranking differences to surface hidden priority conflicts that need resolution before scoring begins.

Scoring validation protocol: Require evidence for all scores. "Why 7 instead of 6?" should produce specific reasoning. "Why 9 instead of 8?" should include comparative examples or data points. This prevents wishful thinking and anchoring bias.

Sensitivity analysis: Test score robustness by adjusting criteria weights ±20% and recalculating. If rank order changes dramatically, the decision is weight-sensitive and requires additional criteria validation or alternative development.

Example Applications

Enterprise software platform selection:

Criteria (weights):

- Technical capability (25 points): Integration flexibility, scalability, security

- Cost structure (20 points): Initial license, implementation, ongoing maintenance

- Vendor relationship (15 points): Support quality, roadmap alignment, partnership potential

- Implementation risk (20 points): Complexity, timeline, change management requirements

- Strategic fit (20 points): Long-term vision alignment, competitive advantage potential

Option scoring example:

- Platform A: Technical (8×25=200), Cost (6×20=120), Vendor (7×15=105), Risk (9×20=180), Strategy (8×20=160) = Total: 765

- Platform B: Technical (9×25=225), Cost (4×20=80), Vendor (8×15=120), Risk (6×20=120), Strategy (9×20=180) = Total: 725

Matrix insights: Platform A wins despite lower technical scores due to superior risk management and cost structure. Platform B's technical excellence doesn't compensate for implementation complexity and budget impact.
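Step 4 is simple arithmetic, but scripting it removes calculation slips and makes the weights auditable. The minimal Python sketch below reproduces the Platform A and B totals from this example; the criterion keys and the `weighted_totals` helper are illustrative names of our own, not part of the PRISM deck. It also checks that the weights sum to 100 points and that every score sits on the 1-10 scale.

```python
# Weighted-score matrix: a sketch of Steps 2-4 using the enterprise software
# example. Scores are 1-10 where higher is always better.

WEIGHTS = {  # 100-point allocation from Step 2
    "technical": 25,
    "cost": 20,
    "vendor": 15,
    "risk": 20,
    "strategy": 20,
}

SCORES = {
    "Platform A": {"technical": 8, "cost": 6, "vendor": 7, "risk": 9, "strategy": 8},
    "Platform B": {"technical": 9, "cost": 4, "vendor": 8, "risk": 6, "strategy": 9},
}


def weighted_totals(weights, scores):
    """Return {option: sum of score x weight} after basic sanity checks."""
    if sum(weights.values()) != 100:
        raise ValueError("weights must sum to 100 points")
    totals = {}
    for option, option_scores in scores.items():
        if set(option_scores) != set(weights):
            raise ValueError(f"{option} has missing or extra criteria")
        if not all(1 <= s <= 10 for s in option_scores.values()):
            raise ValueError(f"{option} has a score outside 1-10")
        totals[option] = sum(option_scores[c] * w for c, w in weights.items())
    return totals


totals = weighted_totals(WEIGHTS, SCORES)
best = max(totals, key=totals.get)
for option, total in sorted(totals.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{option}: {total}")
print("Highest-scoring option:", best)
```

Running it prints Platform A: 765 and Platform B: 725 and names Platform A as the highest-scoring option, matching the hand calculation.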

Market expansion strategy evaluation:

Criteria (weights):

- Market opportunity (30 points): Size, growth rate, competitive intensity

- Organisational capability (25 points): Existing strengths, required new capabilities

- Financial requirements (20 points): Investment needs, payback timeline, cash flow impact

- Strategic positioning (15 points): Brand fit, competitive advantage, future options

- Execution complexity (10 points): Operational requirements, partnership needs

Geographic comparison:

- Europe expansion: Opportunity (7×30=210), Capability (8×25=200), Financial (6×20=120), Positioning (9×15=135), Complexity (7×10=70) = Total: 735

- Asia expansion: Opportunity (9×30=270), Capability (5×25=125), Financial (4×20=80), Positioning (6×15=90), Complexity (3×10=30) = Total: 595

Matrix insights: Europe's higher organisational readiness, stronger strategic positioning, and lower execution complexity compensate for its lower market opportunity. Asia's larger opportunity is offset by capability gaps, heavier financial requirements, and execution complexity.
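The ±20% sensitivity check from the facilitation notes can be scripted the same way. This Python sketch (helper names are hypothetical) applies it to the geographic comparison above, nudging one weight at a time by 20% in each direction and reporting any change that reorders the options. It assumes one-at-a-time changes; the deck does not say whether weights should be varied singly or together.

```python
# Sensitivity check: vary each weight by +/-20% (one at a time, others fixed)
# and report any change that alters the rank order of the options.

WEIGHTS = {"opportunity": 30, "capability": 25, "financial": 20,
           "positioning": 15, "complexity": 10}

SCORES = {  # higher is better; a low "complexity" score means hard to execute
    "Europe": {"opportunity": 7, "capability": 8, "financial": 6,
               "positioning": 9, "complexity": 7},
    "Asia": {"opportunity": 9, "capability": 5, "financial": 4,
             "positioning": 6, "complexity": 3},
}


def ranking(weights, scores):
    """Options ordered from highest to lowest weighted total."""
    totals = {opt: sum(s[c] * w for c, w in weights.items())
              for opt, s in scores.items()}
    return sorted(totals, key=totals.get, reverse=True)


def rank_flips(weights, scores, swing=0.20):
    """List (criterion, factor, new ranking) for every single-weight
    change of +/-swing that alters the baseline rank order."""
    baseline = ranking(weights, scores)
    flips = []
    for criterion in weights:
        for factor in (1 - swing, 1 + swing):
            adjusted = dict(weights)
            adjusted[criterion] = weights[criterion] * factor
            new_order = ranking(adjusted, scores)
            if new_order != baseline:
                flips.append((criterion, factor, new_order))
    return flips


print("Baseline ranking:", ranking(WEIGHTS, SCORES))
flips = rank_flips(WEIGHTS, SCORES)
print("Weight-sensitive:" if flips else "Robust to +/-20% weight changes", flips or "")
```

Renormalising the adjusted weights back to 100 would scale every total by the same factor, so it cannot change the rank order and is skipped here. With Europe ahead by 140 points, no single ±20% change flips the result.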

Common Failure Modes

Criteria proliferation: Using 15+ criteria that create evaluation complexity without improving decision quality. Prevention: Cluster related criteria and focus on factors that genuinely differentiate alternatives.

Weight manipulation: Adjusting criteria weights to favour predetermined preferences rather than reflecting strategic priorities. Prevention: Establish weights before scoring alternatives and resist revision unless new strategic information emerges.

Scoring inflation: Giving high scores without supporting evidence, especially for preferred alternatives. Prevention: Require specific justification for scores above 7 and comparative evidence for scores of 9-10.

Analysis paralysis: Continuing evaluation refinement instead of proceeding with the highest-scoring alternative. Prevention: Set a decision deadline and proceed with the best available analysis rather than a perfect analysis.

False precision: Treating final scores as the absolute truth rather than a structured approximation. Prevention: Use scores for relative comparison and maintain healthy scepticism about precise numerical differences.

System Integration Insights

Connection to Week 2 Decision Framing: Evaluation criteria should directly reflect objectives and success metrics from your decision framing exercise. If the criteria feel disconnected from your framed objectives, revisit the criteria or the original frame.

Week 3 Creative Volume Management: The matrix methodology scales to 15+ alternatives. Group similar alternatives into categories for initial screening, then apply the full matrix to the top 3-5 from each category.

Uncertainty Acknowledgment: When criterion scoring involves significant uncertainty, flag these areas for Probabilistic Dice Check analysis rather than treating uncertain estimates as confident scores.

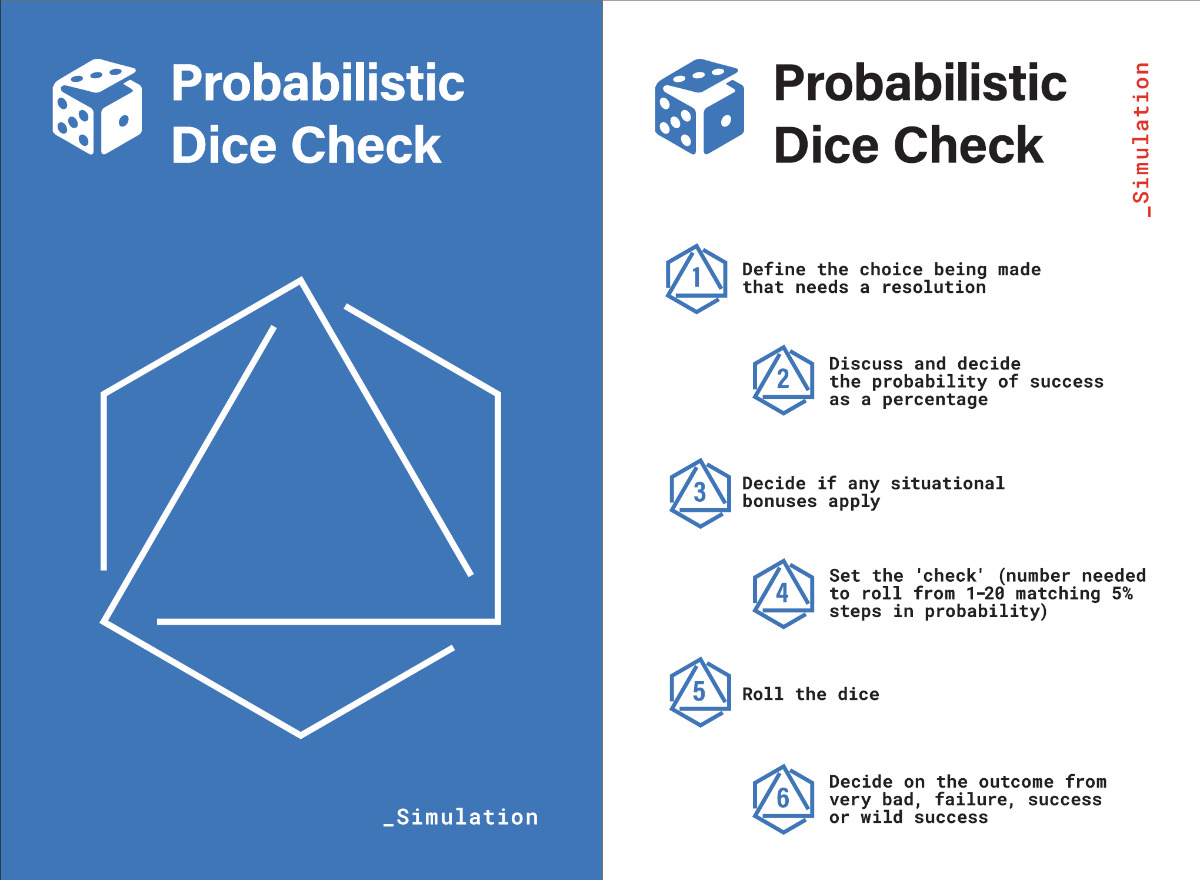

🎲 Probabilistic Dice Check – Reality Check

What: Structured risk assessment methodology that converts strategic uncertainty into calibrated probability estimates, then uses dice mechanics to simulate outcomes and test decision robustness under uncertainty.

Why: Strategic decisions involve unknowable variables that matrix scoring can't handle systematically. Probabilistic thinking converts uncertainty into manageable risk assessment, while dice simulation reveals how decisions perform across probability distributions.

When: Following matrix analysis when key variables involve significant uncertainty, market entry decisions, technology bets, partnership choices, or any situation where success probability matters more than deterministic scoring.

How to Roll

Step 1: Define the Choice Requiring Resolution. Clearly state the specific uncertain element that needs a probability assessment. Focus on single variables rather than compound uncertainties, and link them directly to the matrix criteria that involve significant uncertainty.

Step 2: Discuss and Determine Success Probability. The team estimates the likelihood of a positive outcome as a percentage, in 5% increments (one per face of a 20-sided die). Use reference class forecasting: "How often do similar initiatives succeed in similar conditions?" Ground estimates in comparable historical examples.

Step 3: Assess Situational Bonuses or Penalties. Identify the factors that raise or lower the base probability: specific organisational capabilities, market conditions, competitive advantages, or risk factors that distinguish this situation from the reference class baseline.

Step 4: Set the Probability Check. Convert the final probability estimate into a roll threshold on a 20-sided die, where each face is worth 5%. 50% probability = roll 11 or higher. 75% probability = roll 6 or higher. 25% probability = roll 16 or higher.

Step 5: Execute the Dice Roll. A physical die roll determines the simulated outcome. This introduces randomness that pure analysis can't capture while preserving the probability discipline established through systematic assessment.

Step 6: Interpret the Outcome and Strategic Implications. Map the dice result to outcome categories: Terrible (major setback), Failure (plan doesn't work), Success (plan achieves objectives), Wild success (exceeds expectations). Use the outcome to test decision robustness and contingency planning.

Step 7: Rinse and Reroll. Roll again to continue a simulation, series, or wargame.
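The Step 4 conversion follows from each d20 face being worth 5%: the chance of rolling T or higher is (21 - T)/20, so T = 21 - p/5 for a success probability p in percent. The Python sketch below encodes that rule and a single roll. Step 6 names four outcome bands but not how rolls map onto them, so the `classify` cut-offs (a failing natural 1 is Terrible, a succeeding natural 20 is Wild success) are our assumption, not the deck's rule.

```python
import random


def d20_threshold(success_pct):
    """Lowest d20 roll that counts as success for a given probability.

    Each face is worth 5%, so P(roll >= T) = (21 - T) / 20,
    which rearranges to T = 21 - success_pct / 5.
    """
    if success_pct % 5 or not 5 <= success_pct <= 100:
        raise ValueError("success_pct must be a multiple of 5 from 5 to 100")
    return 21 - success_pct // 5


def classify(roll, threshold):
    """Map a roll to the four Step 6 outcome bands.

    ASSUMPTION (ours, not the deck's): a failing natural 1 is "Terrible",
    a succeeding natural 20 is "Wild success"; otherwise the threshold
    decides between "Success" and "Failure".
    """
    if roll < threshold:
        return "Terrible" if roll == 1 else "Failure"
    return "Wild success" if roll == 20 else "Success"


for pct in (25, 50, 75):
    print(f"{pct}% -> roll {d20_threshold(pct)} or higher")

rng = random.Random()  # pass a seed, e.g. random.Random(7), for repeatable sessions
roll = rng.randint(1, 20)
print(roll, classify(roll, d20_threshold(40)))
```

Because the bands only split the success and failure ranges, the overall success rate still equals the estimated probability.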

Professional Facilitation Methodology

Reference class calibration: Identify 5-10 comparable historical situations and their success rates before probability estimation. This grounds estimates in reality rather than optimism or fear. Ask "What category of initiative is this, and how often do those succeed?"

Probability anchoring prevention: Have team members independently write probability estimates before discussion. Average individual estimates, then adjust based on situation-specific factors. This prevents first-mover anchoring and incorporates diverse perspectives.
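Averaging sealed estimates and snapping the result to a d20-compatible 5% step can be sketched as follows. The five estimates are invented for illustration, and rounding half up to the nearest 5% is our choice; the spread it reports can be compared with the 20-point disagreement threshold discussed under Calibration Patterns.

```python
def pooled_estimate(estimates_pct):
    """Average independent estimates and snap to the nearest 5% (one d20 face).

    Returns (mean, snapped, spread), where spread is the highest minus the
    lowest estimate: a rough signal of disagreement before discussion.
    """
    if not estimates_pct:
        raise ValueError("need at least one estimate")
    mean = sum(estimates_pct) / len(estimates_pct)
    snapped = 5 * int(mean / 5 + 0.5)  # round half up (built-in round() rounds half to even)
    spread = max(estimates_pct) - min(estimates_pct)
    return mean, snapped, spread


# Hypothetical sealed estimates (percent) written down before discussion
mean, snapped, spread = pooled_estimate([35, 45, 40, 50, 40])
print(f"mean {mean:.1f}%, use {snapped}%, spread {spread} points")
```

Here the 42% mean snaps to 40% (a roll of 13 or higher), with a 15-point spread. The team then adjusts that figure for situation-specific factors.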

Outcome scenario development: Before dice roll, define specific meanings for each outcome category in the current decision context. "Success means X, failure means Y, wild success means Z." This transforms abstract probabilities into concrete strategic implications.

Multiple simulation rounds: Run 3-5 dice rolls for high-stakes decisions to test consistency and explore probability distribution effects. Multiple rounds reveal alternate worlds or second-order consequences and improve decision robustness across outcome variety.
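A quick simulation shows why a handful of rolls explores alternate worlds rather than measuring probability. This Python sketch (the `simulate` helper is hypothetical) rolls a virtual d20 against the 40% threshold of 13: three or five rounds can land far from 40%, while thousands of rounds settle close to it.

```python
import random


def simulate(threshold, rounds, seed=None):
    """Roll a virtual d20 `rounds` times; return the share of rolls >= threshold."""
    rng = random.Random(seed)
    hits = sum(rng.randint(1, 20) >= threshold for _ in range(rounds))
    return hits / rounds


# 40% success probability -> threshold 13 on a d20
for rounds in (3, 5, 10_000):
    print(f"{rounds:>6} rounds: {simulate(13, rounds):.0%} successes")
```

In a live session, the value of three to five physical rolls lies in the distinct storylines they generate, not in the success rate they happen to show.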

Example Applications

Technology platform investment decision:

Step 1 - Choice: "Will our AI-powered customer service platform achieve 40% cost reduction within 18 months?"

Step 2 - Base probability: Reference class of enterprise AI implementations shows 35% success rate for cost reduction targets within the timeline.

Step 3 - Situational factors identified in group discussion:

- Bonus (+15%): Strong technical team, executive sponsorship, clear success metrics

- Penalty (-10%): Legacy system integration complexity, organisational change resistance

- Adjusted probability: 35% + 15% - 10% = 40%

Step 4 - Dice check: 40% = roll 13 or higher on d20

Step 5 - Roll result: 15 (Success)

Step 6 - Scenario implications: The success result confirms the investment's value for the next round of the game, so proceed with full implementation. Still develop contingency plans for failure scenarios (gradual rollout, alternative vendors).

Market expansion partnership:

Step 1 - Choice: "Will partnership with regional distributor generate $5M revenue in Year 1?"

Step 2 - Base probability: Similar international partnerships achieve revenue targets 55% of the time in the first year.

Step 3 - Situational factors:

- Bonus (+20%): Partner has strong market presence, exclusive territory agreement

- Penalty (-15%): Currency volatility, regulatory uncertainty, unproven demand

- Adjusted probability: 55% + 20% - 15% = 60%

Step 4 - Dice check: 60% = roll 9 or higher on d20

Step 5 - Roll result: 7 (Failure)

Step 6 - Strategic implications: Failure simulation reveals partnership risks. Consider alternative strategies: direct market entry, multiple smaller partnerships, and a delayed timeline with a market validation phase.
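The arithmetic in both worked examples can be checked in a few lines. This sketch assumes bonuses and penalties add linearly, as the examples do, and clamps the adjusted probability to 5-100% so it always maps to a d20 face; the clamp is our addition.

```python
def adjusted_probability(base_pct, bonus_pct, penalty_pct):
    """Step 3: base rate + bonuses - penalties, clamped to 5-100% (our addition)."""
    return max(5, min(100, base_pct + bonus_pct - penalty_pct))


def d20_threshold(success_pct):
    """Step 4: each d20 face is 5%, so T = 21 - p/5 (p a multiple of 5)."""
    return 21 - success_pct // 5


# (base %, bonus %, penalty %, roll) taken from the two examples above
examples = {
    "AI customer service platform": (35, 15, 10, 15),
    "Regional distributor partnership": (55, 20, 15, 7),
}

for name, (base, bonus, penalty, roll) in examples.items():
    p = adjusted_probability(base, bonus, penalty)
    t = d20_threshold(p)
    result = "Success" if roll >= t else "Failure"
    print(f"{name}: {p}% -> need {t}+, rolled {roll}: {result}")
```

Both lines match the text: 40% needs 13+ and a 15 succeeds; 60% needs 9+ and a 7 fails.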

Calibration Patterns

Overconfidence correction: Teams typically overestimate success probability by 15-25% compared to reference class baselines. Systematic reference class comparison reduces optimism bias while maintaining strategic ambition. Calibration also improves with practice.

Uncertainty acknowledgment: When teams can't agree on probability within 20 percentage points, the decision involves too much uncertainty for this method. Consider information gathering or scenario planning before probability assessment.

Outcome asymmetry: Pay special attention to downside scenarios. "Wild success" provides an upside surprise, but "Terrible" outcomes can threaten organisational survival, so weight protection against catastrophic failure more heavily than the opportunity for exceptional success.

Decision Robustness Testing

Sensitivity analysis: Test decision quality across the probability range. The decision is robust if you'd make the same choice at 30%, 50%, and 70% success probability. Gather more information before proceeding if the choice changes dramatically with probability shifts.
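One way to run this test is to compare expected payoffs at each probability. The sketch below assumes hypothetical payoffs (+10 units for success, -4 or -8 for failure, 0 for holding) and a simple expected-value rule; the deck prescribes neither. With the milder downside the choice is the same at 30%, 50%, and 70%; with the harsher one it flips between 30% and 50%, which is the signal to gather more information.

```python
def choice_at(success_pct, win, loss, hold_value=0):
    """'proceed' if the bet's expected payoff beats holding, else 'hold'.

    Payoffs are in arbitrary units; working in whole percentages keeps the
    arithmetic exact: EV x 100 = p * win + (100 - p) * loss.
    """
    ev_x100 = success_pct * win + (100 - success_pct) * loss
    return "proceed" if ev_x100 > hold_value * 100 else "hold"


def robustness(win, loss, probabilities=(30, 50, 70), hold_value=0):
    """Return (robust?, {probability: choice}) across the test probabilities."""
    choices = {p: choice_at(p, win, loss, hold_value) for p in probabilities}
    return len(set(choices.values())) == 1, choices


# Hypothetical payoffs: success is worth +10 units, failure costs 4 or 8
print(robustness(win=10, loss=-4))  # same choice at every probability -> robust
print(robustness(win=10, loss=-8))  # choice changes -> gather more information
```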

Portfolio effects: Consider how multiple probabilistic decisions interact. Several 60% probability bets might create overall portfolio risk if correlated. Diversify uncertainty across uncorrelated strategic initiatives.
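The effect of correlation is easiest to see in the chance that every bet fails at once. This sketch uses a hypothetical mixture model of our own: some share of the time a single common driver decides all bets together, and otherwise each bet rolls independently, so each bet keeps its 60% success rate on its own.

```python
def p_all_fail(n_bets, success_pct, shared_pct):
    """Chance that every bet in a portfolio fails.

    Hypothetical mixture model: with probability shared_pct/100 one common
    roll decides all bets together; otherwise each bet rolls independently.
    Either way, each bet on its own still succeeds success_pct% of the time.
    """
    q = 1 - success_pct / 100  # failure chance of a single bet
    s = shared_pct / 100
    return s * q + (1 - s) * q ** n_bets


for shared in (0, 50, 100):
    print(f"{shared}% shared driver: P(all three 60% bets fail) = "
          f"{p_all_fail(3, 60, shared):.3f}")
```

Three independent 60% bets all fail about 6.4% of the time; with a fully shared driver that rises to 40%, the same as a single bet, and a 50% shared driver gives 23.2%.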

Option value thinking: Some decisions create future options rather than immediate outcomes. Use probabilistic analysis to value option creation ("What's the probability this creates valuable future choices?") rather than just immediate success.

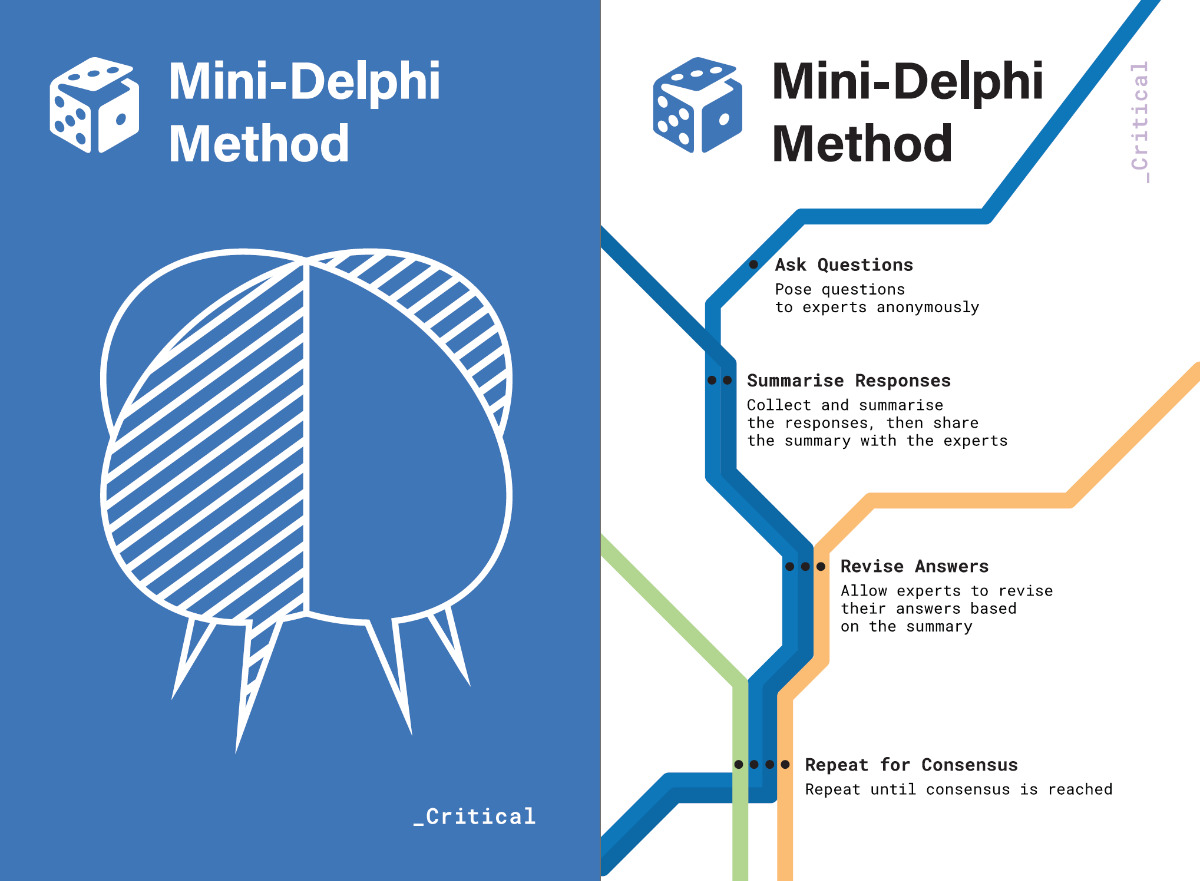

🎲 Mini-Delphi Method – Fast Consensus

What: Structured expert consultation methodology that harnesses collective intelligence while preventing groupthink through anonymous input, systematic summary sharing, and iterative consensus building.

Why: Complex strategic decisions require expertise that no single person possesses. Traditional expert meetings favour vocal participants and create conformity pressure. The Mini-Delphi process captures diverse expert knowledge while building genuine consensus through systematic iteration.

When: Following matrix and probability analysis when technical expertise is required, multi-stakeholder decisions, complex evaluation criteria requiring specialised knowledge, and any situation where expert opinion diversity needs systematic integration.

The Five-Round Consensus

Round 1: Anonymous Expert Input. Pose structured questions to identified experts without group interaction. Use a consistent question format: "What factors should influence this decision? What are the most likely outcomes? What risks require attention?" Collect responses anonymously to prevent influence bias.

Round 2: Summary Distribution and Response. Compile expert input into a categorical summary without attribution. Share the summary with all experts and request a response: "Given this collective input, what would you add, modify, or emphasise?" Allow experts to revise their initial positions based on collective insight.

Round 3: Convergence Assessment. Analyse response patterns to identify areas of emerging consensus and persistent disagreement. Create structured surveys for areas needing resolution: "Rank these factors by importance" or "Estimate probability ranges for these outcomes."

Round 4: Focused Resolution. Share survey results and request final input on remaining disagreements. Frame the remaining questions explicitly: "Expert Group A emphasises Factor X, Expert Group B emphasises Factor Y. What additional considerations might resolve this difference?"

Round 5: Final Consensus. Consolidate the results into an actionable recommendation, recording where consensus is strong, where it is moderate, and the confidence level attached to each conclusion.

Professional Facilitation Methodology

Expert selection criteria: Choose participants based on relevant experience, diverse perspectives, and willingness to reconsider initial positions. Include 7-12 experts: fewer risks missing critical perspectives, while more adds management complexity. Balance internal and external expertise.

Question design discipline: Use open-ended questions for Round 1 to capture unforeseen factors. Use structured questions for Rounds 3-4 to enable systematic comparison. Avoid leading questions that bias expert responses toward predetermined conclusions.

Anonymity maintenance: Use a neutral facilitator to collect and summarise responses without attribution. This prevents hierarchical influence and encourages honest expert assessment even when opinions conflict with organisational preferences.

Iteration management: Set clear timeline expectations (typically 1-2 weeks per round). Balance thorough analysis with implementation urgency. Stop iteration when additional rounds produce minimal new insight or consensus improvement.
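A common way to quantify Delphi convergence on numeric questions is to track the median and interquartile range (IQR) of the anonymous estimates in each round. The Python sketch below does this for three rounds of invented market-size estimates; the 20% minimum-improvement stopping rule is an illustrative stand-in for "minimal consensus improvement", not a threshold from the deck.

```python
import statistics


def round_summary(estimates):
    """Median and interquartile range (IQR) of one round's numeric estimates."""
    q1, _, q3 = statistics.quantiles(estimates, n=4, method="inclusive")
    return statistics.median(estimates), q3 - q1


def should_stop(prev_iqr, curr_iqr, min_improvement=0.20):
    """Stop iterating when the IQR shrinks by less than min_improvement (a fraction)."""
    if prev_iqr == 0:
        return True
    return (prev_iqr - curr_iqr) / prev_iqr < min_improvement


# Hypothetical anonymous market-size estimates (millions) over three rounds
rounds = [
    [50, 80, 120, 150, 200, 90, 110, 60],
    [80, 90, 110, 120, 140, 100, 105, 85],
    [95, 100, 105, 110, 115, 100, 102, 98],
]

prev_iqr = None
for i, estimates in enumerate(rounds, start=1):
    median, iqr = round_summary(estimates)
    note = "" if prev_iqr is None else f", stop={should_stop(prev_iqr, iqr)}"
    print(f"Round {i}: median {median}, IQR {iqr}{note}")
    prev_iqr = iqr
```

Here the IQR narrows from 52.5 to 23.75 to 6.75, so each round still improves consensus by more than 20% and the rule would not yet call a stop.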

Example Applications

Digital transformation technology selection:

Round 1 - Expert input: Eight IT leaders, four business stakeholders, and three external consultants assessed cloud platform alternatives, implementation approaches, and risk factors.

Round 2 - Summary response: Experts identified security, integration complexity, and vendor lock-in as primary concerns. Business stakeholders emphasised speed-to-value and user adoption. External consultants highlighted market evolution trends.

Round 3 - Structured survey: Experts ranked evaluation criteria and estimated implementation timelines. Strong consensus on security importance (90% agreement), moderate consensus on timeline estimates (70% agreement), disagreement on vendor lock-in significance.

Round 4 - Focused resolution: Addressed the vendor lock-in disagreement by exploring mitigation strategies and long-term competitive implications. Technical experts remained concerned; business stakeholders accepted the risk in exchange for implementation speed.

Round 5 - Final consensus: Recommended hybrid approach with security-first vendor selection, phased implementation to manage complexity, and explicit vendor diversification strategy to address lock-in concerns.

Market entry strategy validation:

Round 1 - Expert consultation: Six industry analysts, four regional business leaders, and three competitive intelligence specialists assessed market opportunity, competitive response, and execution requirements.

Round 2 - Collective insight: Market opportunity estimates varied widely (50M to 200M addressable market). Competitive response predictions ranged from aggressive to minimal. Execution complexity concerns clustered around regulatory compliance and local partnerships.

Round 3 - Convergence focus: Market size discrepancy traced to different customer segment definitions. Competitive response uncertainty related to market leadership assumptions. Regulatory complexity showed strong expert consensus.

Round 4 - Resolution dialogue: Refined market sizing methodology through segment-specific analysis. Scenario planning for competitive responses. Deep dive on regulatory requirements with local legal experts.

Round 5 - Strategic recommendation: Conservative market estimate with expansion triggers, defensive competitive strategies, and a regulatory-first implementation approach. High confidence in execution approach, moderate confidence in market timing.

Consensus Quality Indicators

Convergence patterns: Effective Delphi processes show increasing expert agreement across rounds as collective intelligence emerges. Persistent disagreement after Round 4 suggests fundamental uncertainty requiring scenario planning rather than a single consensus.

Insight emergence: Best outcomes include expert discoveries that no individual initially identified. Through systematic perspective integration, collective intelligence should produce insights superior to individual expert analysis.

Implementation readiness: Strong consensus should translate into actionable recommendations with clear next steps. Weak consensus suggests additional information gathering or alternative decision approaches before implementation.

Common Implementation Challenges

Expert fatigue: Delphi processes require sustained expert engagement across multiple rounds. Minimise the time burden through focused questions and efficient summarisation, and communicate the value of collective intelligence to maintain participation.

False consensus: Apparent agreement that masks underlying disagreement about definitions or assumptions. Test consensus robustness by asking experts to explain the reasoning behind their positions and identify conditions that would change their assessments.

Authority pressure: Even anonymous processes can create conformity pressure if organisational preferences become apparent. Maintain genuine neutrality and explicitly value dissenting expert perspectives that might reveal essential risks or opportunities.

The Evaluation Station

Before state: Creative option abundance creating analysis paralysis, evaluation by gut feeling or politics, expert opinions conflicting without a resolution framework, decisions delayed by evaluation complexity.

After state: Systematic trade-off analysis (explicit criteria and weights), uncertainty integration (probability-based risk assessment), expert consensus (collective intelligence without groupthink), implementation-ready decisions with supporting logic.

The compound effect: These three exercises handle different aspects of evaluation complexity that overwhelm traditional decision-making. Matrix provides structure for known criteria. Probability handles uncertain variables. Delphi harnesses expertise for complex assessment.

Measurable Evaluation Indicators

Decision speed metrics: Time from option generation to implementation decision (target: 2-3 weeks for strategic choices, 3-5 days for operational decisions).

Logic transparency: Stakeholders can understand and explain decision reasoning using evaluation frameworks rather than relying on authority or intuition.

Confidence calibration: Team confidence in decisions correlates with systematic evaluation thoroughness rather than wishful thinking or political pressure.

Common Failure Modes

Matrix manipulation: Adjusting criteria or weights to favour predetermined preferences rather than systematic assessment. Prevention: Establish evaluation criteria before scoring alternatives and resist revision without new strategic information.

Probability wishful thinking: Overestimating success likelihood based on optimism rather than reference class analysis. Prevention: Require historical comparison data and a justification of situational factors for every probability estimate.

Delphi groupthink: Experts converging on a safe consensus rather than an honest assessment. Prevention: Maintain genuine anonymity and explicitly reward dissenting perspectives that identify risks or opportunities.

Your Week 4 Implementation Challenge

Apply this evaluation precision sequence to the strategic alternatives you generated in Week 3. Use your Week 2 domain diagnosis to calibrate evaluation intensity: Complex domains need more uncertainty analysis, while Simple domains need only a straightforward matrix comparison.

Execution Protocol

Session structure: 150 minutes total

- Multi-Choice Decision Matrix: 60 minutes (15 criteria development, 15 weight allocation, 20 option scoring, 10 calculation and analysis)

- Probabilistic Dice Check: 30 minutes (10 uncertainty identification, 10 probability assessment, 10 simulation and implications)

- Mini-Delphi Method: 60 minutes (20 expert input collection, 15 summary preparation, 15 response gathering, 10 consensus assessment)

Materials needed: Evaluation matrices (printed templates), calculators or spreadsheet access, 20-sided dice, expert contact information, and an anonymous response collection method.

Success Criteria Checklist

Multi-Choice Matrix completion:

[ ] Identified 5-8 evaluation criteria that differentiate strategic alternatives

[ ] Allocated 100 weight points across criteria with team consensus on priorities

[ ] Scored all alternatives using a consistent 1-10 scale with supporting evidence

[ ] Calculated weighted scores and identified the highest-scoring option with confidence intervals

[ ] Tested sensitivity by adjusting weights ±20% to verify decision robustness

Probabilistic Dice Check validation:

[ ] Identified key uncertain variables from matrix evaluation requiring probability assessment

[ ] Estimated success probability using reference class comparison and situational factors

[ ] Converted probability to dice threshold and executed simulation rounds

[ ] Interpreted outcomes across success/failure scenarios with strategic implications

[ ] Developed contingency approaches for different probability outcomes

Mini-Delphi Method execution:

[ ] Engaged 7-12 relevant experts with diverse perspectives and specialised knowledge

[ ] Conducted anonymous input collection with structured question methodology

[ ] Summarised expert responses without attribution and facilitated iterative consensus building

[ ] Achieved convergence on key evaluation factors with documented confidence levels

[ ] Translated expert consensus into actionable recommendations for implementation

Diagnostic Signals

Positive indicators:

- Evaluation clarity: Decision logic becomes transparent and explainable to stakeholders not involved in the evaluation process.

- Confidence correlation: Team confidence in the recommended option correlates with systematic evaluation thoroughness rather than familiarity or political comfort.

- Implementation readiness: Evaluation results directly inform resource allocation, timeline planning, and risk mitigation strategies for the chosen alternative.

Warning signals:

- Score clustering: If matrix scores cluster within narrow ranges, the criteria may not differentiate meaningfully between alternatives. Revise the criteria or acknowledge that alternatives are genuinely similar.

- Probability disagreement: If expert probability estimates vary by more than 30 percentage points, uncertainty is too high for this decision framework. Consider information gathering or scenario planning.

- Expert non-participation: If experts decline Delphi participation or provide minimal input, the decision may not require specialised expertise, or experts may question the strategic importance.

Domain-Specific Calibration

Simple domain approach: Emphasise matrix evaluation with straightforward criteria. Use probability assessment for implementation risks rather than strategic uncertainty. Minimal expert consultation needed.

Complicated domain approach: Balance matrix and expert input with technical criteria. Use probability assessment for technical risk factors. Emphasise expert consensus for specialised knowledge requirements.

Complex domain approach: Equal emphasis across all three methods. Use a matrix for known criteria, probability for emergence patterns, and expert input for system dynamics assessment.

Chaotic domain approach: Rapid matrix evaluation with crisis-relevant criteria. High uncertainty probability assessment. Expert input focused on stabilisation strategies rather than optimisation.

The Bridge to Risk Anticipation

Evaluation precision achieved: strategic alternatives have been systematically assessed, and an implementation-ready decision has been selected with supporting logic and expert validation. The next requirement is systematic risk anticipation, so that implementation surprises don't derail carefully evaluated strategies.

Progression: Week 1 taught rapid decision-making under pressure. Week 2 taught precision problem framing. Week 3 taught systematic option generation. Week 4 taught structured evaluation and selection. Week 5 will teach systematic risk anticipation and management for implementation success.

Risk anticipation: Research from McKinsey shows that 70% of strategic implementation failures trace back to risks identifiable in advance but not systematically anticipated. Teams that can evaluate options systematically but can't anticipate implementation challenges create expensive post-decision surprises.

The evaluation-to-execution bridge: Systematic evaluation provides implementation confidence, but implementation success requires systematic risk management.

Next week introduces three frameworks designed explicitly for implementation risk anticipation: Pre-Mortem (failure mode identification), Tripwires (early warning systems), and Low Chance-High Impact analysis (black swan preparation). Together, they turn a selected strategy into an implementation-resilient plan that is prepared for unlikely but catastrophic scenarios.

Because selecting the right strategy is only valuable if you can implement it successfully. And successful implementation requires systematic anticipation of what could go wrong before it does.

Ready to master systematic strategic thinking? Get the complete PRISM Strategic Tabletop Exercise Guide with all 27 exercises, facilitation guides, and advanced techniques. Transform your team's decision-making in real-time, in real rooms, on real challenges.

Get the full stack PRISM Tabletop Exercise set here for AUD 40, and unlock all 27 exercises.